If you’re not A/B testing your site, you’re leaving money on the table.

The only way to truly evaluate your conversion funnel and marketing campaign is to get data directly from your customers’ behavior.

A/B testing lets you do just that.

What is A/B Testing?

A/B testing (also known as split testing) is the process of comparing two versions of a web page, email, or other marketing asset and measuring the difference in performance.

You do this by giving one version to one group and the other version to another group. Then you can see how each variation performs.

Think of it like a competition. You’re pitting two versions of your asset against one another to see which comes out on top.

Knowing which marketing asset works better can help inform future decisions when it comes to web pages, email copy, or anything else.

How Does A/B Testing Work?

To understand how A/B testing works, let’s take a look at an example.

Imagine you have two different designs for a landing page—and you want to know which one will perform better.

After you create your designs, you show one landing page to one group and the other version to a second group. Then you see how each landing page performs in metrics such as traffic, clicks, or conversions.

If one performs better than the other, great! You can start digging into why that is, and it might inform the way you create landing pages in the future.
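One common way to divide visitors into two groups is to hash a visitor ID, so the same person always sees the same version. The sketch below is a minimal illustration of that idea, not the method of any particular testing tool. The function name `assign_variant`, the experiment label, and the visitor ID format are all assumptions made up for this example.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str = "landing-page-test") -> str:
    """Deterministically place a visitor in group A or B (roughly 50/50)."""
    # Hash the experiment name together with the visitor ID so that the
    # same visitor always gets the same version within this experiment.
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % 100  # a number from 0 to 99
    return "A" if bucket < 50 else "B"


if __name__ == "__main__":
    # Hypothetical visitor IDs, used only to show the split is close to even.
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"visitor-{i}")] += 1
    print(counts)
```

Because the assignment depends only on the ID, a returning visitor does not flip between designs, which keeps the two groups clean.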

Why Do You Really Need To Do A/B Testing?

Creating a website or email marketing campaign is just the first step in marketing. Once you have a website, you’ll want to know if it helps or hinders sales.

A/B testing lets you know what words, phrases, images, videos, testimonials, and other elements work best. Even the simplest changes can impact conversion rates.

One test showed that a red CTA button outperformed a green one by 21 percent based on 2,000 page visits.

If such a minor change can get people to click, you’ll want to know what other elements of your page might have an impact on conversions, traffic, and other metrics.

Split Testing vs A/B Testing: What Are the Types of Tests?

The terms “split testing” and “A/B testing” are often used interchangeably. They’re actually two different types of tests.

A/B testing involves comparing two versions of your marketing asset based on changing one element, such as the CTA text or image on a landing page.

Split testing involves comparing two distinct designs.

I prefer A/B testing because I want to know which elements actually contribute to the differences in data. For instance, if I compare two completely different versions of the same page, how do I know whether more people converted based on the color, the image, or the text?

A/B Testing Statistics: What Are Champions, Challengers and Variations?

The statistics or data you gather from A/B testing come from champions, challengers, and variations. Each version of a marketing asset provides you with information about your website visitors.

Your champion is a marketing asset — whether it’s a web page, email, Facebook Ad, or something else entirely — that you suspect will perform well or that has performed well in the past. You test it against a challenger, which is a variation on the champion with one element changed.

After your A/B test, you either have a new champion or discover that the first variation performed best. You then create new variations to test against your champion.

Many people think that you can get away with a single A/B test. In reality, you should be continuously testing so you can optimize your marketing and advertising creative for your audience.

Which Are the Best Elements to A/B Test?

Some elements of a marketing asset contribute to conversions more than others. Changing one word in the body copy of an email, for instance, probably won’t make much of a difference in conversion or click-through rates.

Since you have limited time, devote your energy to the most impactful elements on your web page or other marketing asset. To give you an overview of what to focus on first, let’s look at ten of the most effective A/B testing elements.

1. Headlines and copywriting

Your headline is the first thing people see when they arrive on a web page. If it doesn’t grab your visitors’ attention, they won’t stick around.

If you’re feeling stuck, check out my video on writing catchy headlines. It’ll give you some ideas for coming up with variations on your existing headlines.

Other aspects of copywriting can also impact conversions. For instance, what text do you use on your CTA button, or as anchor text for your CTA link?

Test different paragraph lengths and different levels of persuasion. Does your audience prefer the hard sell or a softer approach? Will you win over prospects with statistics or anecdotal copy?

2. CTAs

Your call to action tells readers what you want them to do now. It should entice the reader to act on your offer because it offers too much value to resist.

Changing even one word in your CTA can influence conversion rates. Other characteristics — such as button color, text color, contrast, size, and shape — can also have an impact on its performance.

Don’t change multiple qualities during one A/B test. If you want to test background color, don’t change the font or link color, too. Otherwise, you won’t know which quality made the difference in your A/B testing data.

3. Images, audio, and video

I strongly believe in omnichannel marketing. Since I know I can’t reach every single member of my target audience through SEO-optimized text, I also create podcasts, videos, and infographics.

If you have a library of videos at your disposal, one good idea is to A/B test video testimonials against written ones, or short infographics against longer versions.

If you don’t have a video channel or resource page yet, even stock images can impact your A/B testing. For instance, if you have a photo of someone pointing at your headline or CTA, the image will naturally draw viewers’ eyes toward that element.

All of these A/B testing experiments can help you figure out what your audience responds to.

4. Subject lines

Email subject lines directly impact open rates. If a subscriber doesn’t see anything he or she likes, the email will likely wind up in the trash bin.

According to recent research, average open rates across more than a dozen industries range from 25 to 47 percent. Even if you’re above average, only about half of your subscribers might open your emails.

A/B testing subject lines can increase your chances of getting people to click. Try questions versus statements, test power words against one another, and consider using subject lines with and without emojis.

5. Content depth

Some consumers prefer high-level information that provides a basic overview of a topic, while others want a deep dive that explores every nook and cranny of the topic. In which category do your target customers reside?

Test content depth by creating two pieces of content. One will be significantly longer than the other and provide deeper insight.

Content depth impacts SEO as well as metrics like conversion rate and time on page. A/B testing allows you to find the ideal balance between the two.

This doesn’t just apply to informational content, such as blog pages. It can also have an impact on landing pages.

At Crazy Egg, we ran an A/B test on our landing page to see whether a long- or short-form page would work better.

The longer page on the right — our challenger — performed 30 percent better. Why? Because people needed more information about Crazy Egg’s tools to make an informed decision.

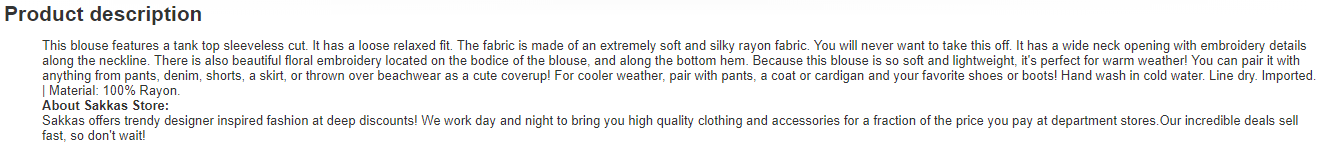

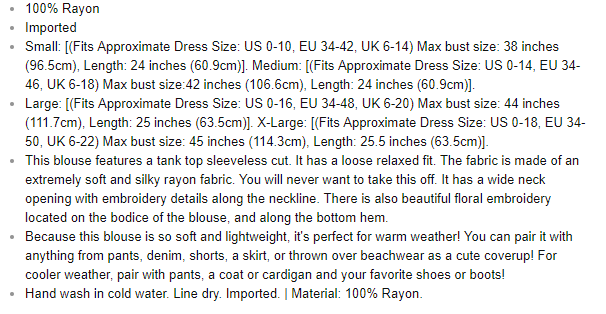

6. Product descriptions

The opposite can be said of product descriptions.

In e-commerce, short product descriptions tend to work best. Consumers want simple, easy-to-digest content that gives them the highlights about a product.

You see brief product descriptions on major sites like Amazon all the time.

If you ask me, this product description could benefit from more white space, but it’s short and easy to read.

However, if you have a more complicated product than, say, a blouse, you might need to get into more detail. Test longer descriptions against shorter ones to see which converts better.

Additionally, you can test product description design. Try testing paragraph copy against bullet points, for instance. At the top of the page referenced below, we see the highlights in bullet format.

Even something as simple as bullet points can affect conversion rates.

7. Social proof

Did you know that 70 percent of consumers rely on opinions they read in online reviews to make purchase decisions?

Displaying social proof on your landing pages, product pages, and other marketing assets can increase conversions, but only if you present it in an appealing way.

A/B test star ratings against testimonials, for instance. You could also test videos vs. static images with quotes.

8. Email marketing

It’s easy to A/B test your marketing emails. You just send version A to 50 percent of your subscribers and version B to the rest.
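Most email service providers handle this split for you. If you ever need to do it by hand, a random 50/50 split might look like the sketch below. The function name `split_subscribers` and the example addresses are hypothetical.

```python
import random


def split_subscribers(subscribers, seed=None):
    """Randomly split a subscriber list into two halves for versions A and B."""
    rng = random.Random(seed)  # pass a seed only if you need a repeatable split
    shuffled = list(subscribers)  # copy so the original list is left untouched
    rng.shuffle(shuffled)
    midpoint = len(shuffled) // 2
    return shuffled[:midpoint], shuffled[midpoint:]


if __name__ == "__main__":
    # Placeholder addresses for illustration only.
    everyone = [f"user{i}@example.com" for i in range(10)]
    group_a, group_b = split_subscribers(everyone, seed=42)
    print(len(group_a), "subscribers get version A")
    print(len(group_b), "subscribers get version B")
```

Shuffling before splitting matters: if you simply cut the list in half, older subscribers could all land in one group and skew the result.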

As I mentioned earlier, even the simplest changes to your email signup form, landing page, or other marketing asset can impact conversions by extraordinary numbers. Let’s say you run an A/B test for 20 days and 8,000 people see each variation. If Version A outperforms Version B by 72 percent, you know you’ve found an element that impacts conversions.

The conclusion is based on three facts:

- You changed just one element on the page or form.

- Equal numbers of people saw each variation.

- The test ran long enough to reach statistical significance.

You won’t know unless you test. Presenting different versions of copy or imagery to your audience at the same time produces scientifically valid results.
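To make the idea of statistical significance concrete, here is a minimal sketch of a two-proportion z-test, one standard way to check whether a gap like this is likely to be real. The 8,000 visitors per variation come from the example above. The conversion counts (344 for Version A, 200 for Version B) are assumptions chosen so that A beats B by 72 percent; they are not real data.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate: the conversion rate if both versions performed the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_a - rate_b) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Assumed counts: 344 of 8,000 convert on A, 200 of 8,000 convert on B.
    conv_a, conv_b, visitors = 344, 200, 8000
    lift = (conv_a / visitors - conv_b / visitors) / (conv_b / visitors)
    z, p = two_proportion_z_test(conv_a, visitors, conv_b, visitors)
    print(f"Relative lift: {lift:.0%}")
    print(f"z = {z:.2f}, p-value = {p:.2g}")
    print("Significant at the 5% level" if p < 0.05 else "Not significant")
```

A p-value below 0.05 is a common threshold, but it is a convention, not a guarantee. Smaller samples or smaller gaps can easily fail this check even when one version looks like the winner.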

9. Media mentions

It’s a great feeling when your business, product, or service appears in a major publication, whether online or off. You want people to know about it, but you also have to present the information clearly and effectively.

Try A/B testing different pull quote designs. You could also test mentioning the publication’s name versus using its logo.

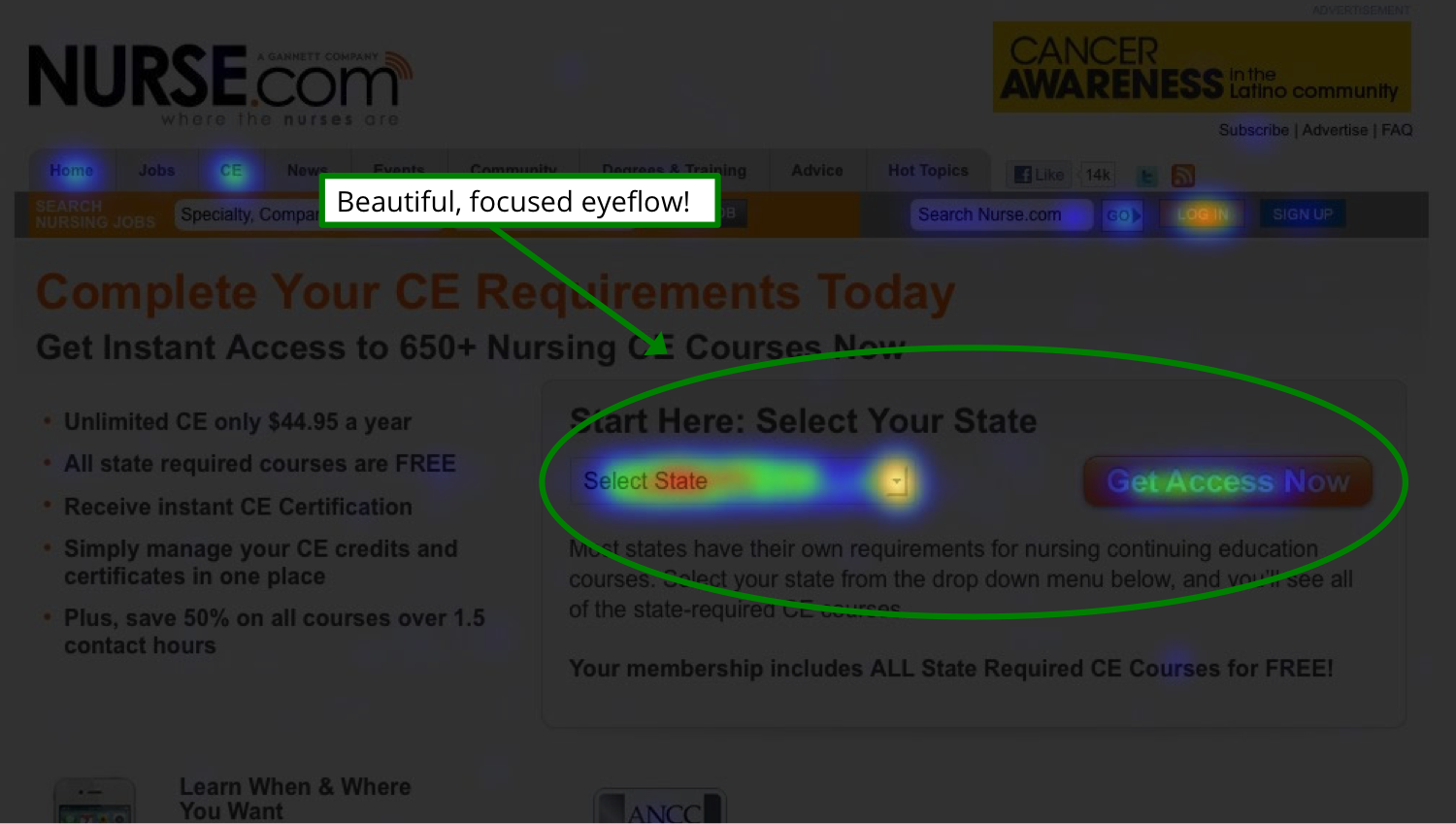

10. Landing pages

Your landing pages need to convert users on whatever offer you present them with. If they don’t, you lose a potential sale.

A heat map can show you where people are clicking on your landing pages. Collecting this data before you run an A/B test will make your hypothesis more accurate and tell you which elements are the most important to test.

You’ll see where people’s eyes focus on the page so you can put your most important element, such as the CTA, there.

How to Do A/B Testing in 2018

A/B testing isn’t complicated, but you need a strategy before you begin. Start with a hypothesis. Which version of your web page or other marketing asset do you believe will work better? And why?

You could also start with a question, such as, “Why isn’t my landing page converting?” You might have significant traffic, but no click-throughs on the CTA. In that case, making changes can help you collect more data about your visitors’ experience on the page.

I don’t recommend running A/B tests without first understanding how your pages are performing. Use Google Analytics to track traffic, referral sources, and other valuable information, then run heat maps, scroll maps, and other tests to see how visitors interact with your site.

Individual visitor session recordings can also be useful. Seeing exactly what a visitor does when he or she lands on a specific page can give you additional insights into where customers may be getting stuck or frustrated.

Armed with knowledge of how your customers are responding to your current marketing strategy and insights on the areas that need improvement, you can start A/B testing to boost your conversion rates and bring in more revenue. Here’s how.

1. Choose what you want to test

Start with a single element you want to test. Make sure it’s relevant to whatever metric you want to improve.

For instance, if you’re trying to generate more organic traffic, focus on an element that impacts SEO, like blog post length. For conversion rate optimization, you might begin with the headline, a video, or a CTA.

2. Set goals

What do you want to achieve with your A/B test? Are you interested in improving conversion rates? Sales? Time on page?

Focus on a single metric at first. You can always run A/B tests later that deal with other metrics. If you concentrate on one thing at a time, you’ll get cleaner data.

3. Analyze data

Look at your existing data, using a free tool like Google Analytics. What does it tell you about your current state based on the metric you want to improve?

This is your starting point or baseline. You’re looking for a change that will move the needle, even if only by a small margin.

4. Select the page that you’ll test

Start with your most important page. It could be your homepage or a highly trafficked landing page. Whatever the case, it should have a significant impact on your business’s bottom line.

5. Set the elements to A/B test

Choose which elements you’ll A/B test on your champion (remember: this is the existing content that you want to alter slightly to see if your metrics improve) and in what order. Start with the elements you think are most likely to influence the target metric.

6. Create a variant

Next, create a variant of your champion. Change only the element you decided on in the previous step and make only one change to it.

If you’re testing the CTA, change the background color, the font color, or the button size, but only one of them. Leave everything else identical to the champion.

7. Choose the best A/B testing tools

The right A/B testing tool depends on what exactly you’re testing. If you’re A/B testing emails, most email service providers have built-in testing tools.

You might also use Crazy Egg to A/B test landing pages and other areas of your website. The main advantage to this approach is that the Crazy Egg algorithm will start funneling the majority of traffic to the winning variation automatically, so you won’t need to check in on the test frequently or worry that you might be directing people to a less effective version of your current content.
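How Crazy Egg's algorithm works internally isn't described here, so the following is only a generic sketch of one well-known technique for shifting traffic toward the better performer, called Thompson sampling. The conversion rates, visitor counts, and function names are all assumptions for the simulation.

```python
import random


def thompson_pick(stats, rng):
    """Pick the variation to show next. stats maps name -> [conversions, visits]."""
    best_name, best_draw = None, -1.0
    for name, (conversions, visits) in stats.items():
        # Draw a plausible conversion rate from what we've seen so far.
        draw = rng.betavariate(1 + conversions, 1 + visits - conversions)
        if draw > best_draw:
            best_name, best_draw = name, draw
    return best_name


def simulate(true_rates, visitors=5000, seed=0):
    """Simulate visitors arriving one at a time; true_rates are made-up values."""
    rng = random.Random(seed)
    stats = {name: [0, 0] for name in true_rates}
    for _ in range(visitors):
        name = thompson_pick(stats, rng)
        stats[name][1] += 1  # one more visit for the chosen variation
        if rng.random() < true_rates[name]:
            stats[name][0] += 1  # the visitor converted
    return stats


if __name__ == "__main__":
    # Assumed true conversion rates: champion 5%, challenger 20%.
    result = simulate({"champion": 0.05, "challenger": 0.20})
    for name, (conversions, visits) in result.items():
        print(f"{name}: {visits} visits, {conversions} conversions")
```

In a run like this, the stronger variation ends up receiving most of the traffic as evidence accumulates, which is the behavior described above.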

8. Design your test

Depending on the tool you’re using, you might have lots of influence over the test design or very little. For instance, you might need to specify how long you want the test to run, which devices you want to collect data from, and other details.

9. Accumulate data

This is the wait-and-see period. With A/B testing software like Crazy Egg, data gets collected automatically. You can view the progress of your test at any time, and when the test concludes, you’ll get data about how many people visited each variation, which devices they used, and more.

10. Analyze the A/B testing statistics

Draw conclusions based on which variation won: the champion or the challenger. Once you better understand which version your audience liked better — and by what margin — you can start this 10-step process over again with a new variant.

How Long Should Your A/B Tests Run?

For most A/B tests, duration matters less than statistical significance. If you run the test for six months and only 10 people visit the page during that time, you won’t have representative data. There are just too few visits on which to base a conclusion.

However, you want the test to run long enough to make sure there’s no evidence of results convergence. This happens when there appears to be a significant difference between the two variations at first, but the difference decreases over time.

If you see convergence during your test, you can conclude that the variation didn’t have a significant impact on the metric you’re tracking.

A/B testing for several weeks will give you good results as long as you have steady traffic.
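If you want a rough number instead of "several weeks," you can estimate how many visitors each variation needs and divide by your daily traffic. The sketch below uses a standard sample-size approximation for comparing two conversion rates. The 3 percent baseline, 20 percent target lift, and 1,000 daily visitors are assumptions for illustration, as are the function names.

```python
import math
from statistics import NormalDist


def visitors_per_variation(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed per variation to detect the given lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)  # chance of catching a real effect
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_needed(n_per_variation, daily_visitors, variations=2):
    """Days required if traffic is split evenly across the variations."""
    return math.ceil(n_per_variation * variations / daily_visitors)


if __name__ == "__main__":
    # Assumed inputs: 3% baseline conversion rate, hoping to detect a 20% lift.
    n = visitors_per_variation(baseline_rate=0.03, relative_lift=0.20)
    print(f"Visitors needed per variation: {n}")
    print(f"Days at 1,000 visitors per day: {days_needed(n, 1000)}")
```

The smaller the lift you want to detect, the more visitors you need, which is why low-traffic pages can take a long time to produce a trustworthy result.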

Consider looking at A/B testing examples from other websites. Many companies publish their findings on marketing blogs like this one so others can benefit from them.

You don’t want to copy a test exactly, but you’ll get a good idea of what to expect.

How Do You Analyze Your A/B Testing Metrics and Take Action?

You want to collect as much data from A/B testing as possible. How many visitors did each variation receive? By what percentage did the winner outperform the loser?

A difference of 4 percent might indicate that your audience had no preference for one over the other. If one outperforms the other by 40 percent, however, you’ve learned something valuable about the element you tested.
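To see why a small gap can mean "no preference," it helps to look at the lift together with a margin of error. The sketch below computes the relative lift and an approximate 95 percent confidence interval for the difference in conversion rates. All of the visitor and conversion counts are made up for illustration.

```python
import math


def lift_report(conv_a, n_a, conv_b, n_b, z=1.96):
    """Return (relative lift of B over A, 95% interval for the rate difference)."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    relative_lift = (rate_b - rate_a) / rate_a
    diff = rate_b - rate_a
    # Standard error of the difference between two independent rates.
    std_err = math.sqrt(rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b)
    return relative_lift, (diff - z * std_err, diff + z * std_err)


if __name__ == "__main__":
    # Assumed data: 5,000 visitors per variation in both scenarios.
    scenarios = {
        "4 percent lift": (250, 5000, 260, 5000),
        "40 percent lift": (250, 5000, 350, 5000),
    }
    for label, counts in scenarios.items():
        lift, (low, high) = lift_report(*counts)
        verdict = "no clear winner" if low < 0 < high else "clear difference"
        print(f"{label}: lift {lift:.0%}, interval [{low:.4f}, {high:.4f}], {verdict}")
```

When the interval includes zero, the data is consistent with the two versions performing the same, so a small lift on its own isn't a reason to declare a winner.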

Conclusion

A/B testing is one of the most powerful ways to collect information about your copywriting and design choices. I use it constantly on all of my websites as well as on my clients’ sites.

Following the right procedure is critical if you want accurate results. Feel free to print out this handy step-by-step guide so you remember each part of the process:

- Choose what you want to test

- Set goals

- Analyze data

- Select the page that you’ll test

- Set the elements to A/B test

- Create a variant

- Choose an A/B testing tool

- Design your test

- Accumulate data

- Analyze the A/B testing statistics

Following these steps in order will unlock numerous benefits:

- You can start learning more about what convinces your audience to convert

- You can streamline the user journey through your website and reduce friction for your potential customers

- Most importantly to your business, you can improve your conversion rates and boost your bottom line!

Start your A/B testing right now!